About me

I am a Visiting Assistant Professor in the Department of Computer Science (CS) at Purdue University. I am also a Faculty Affiliate of the Governance and Responsible AI Lab (GRAIL) in Purdue University’s Department of Political Science. I earned my Ph.D. in Computer Science from Purdue University, advised by Dr. Dan Goldwasser. I obtained my M.Sc. in Computer Science (CS) from Old Dominion University (ODU) and my B.Sc. in Computer Science and Engineering (CSE) from Bangladesh University of Engineering and Technology (BUET).

[CV]

Research Overview

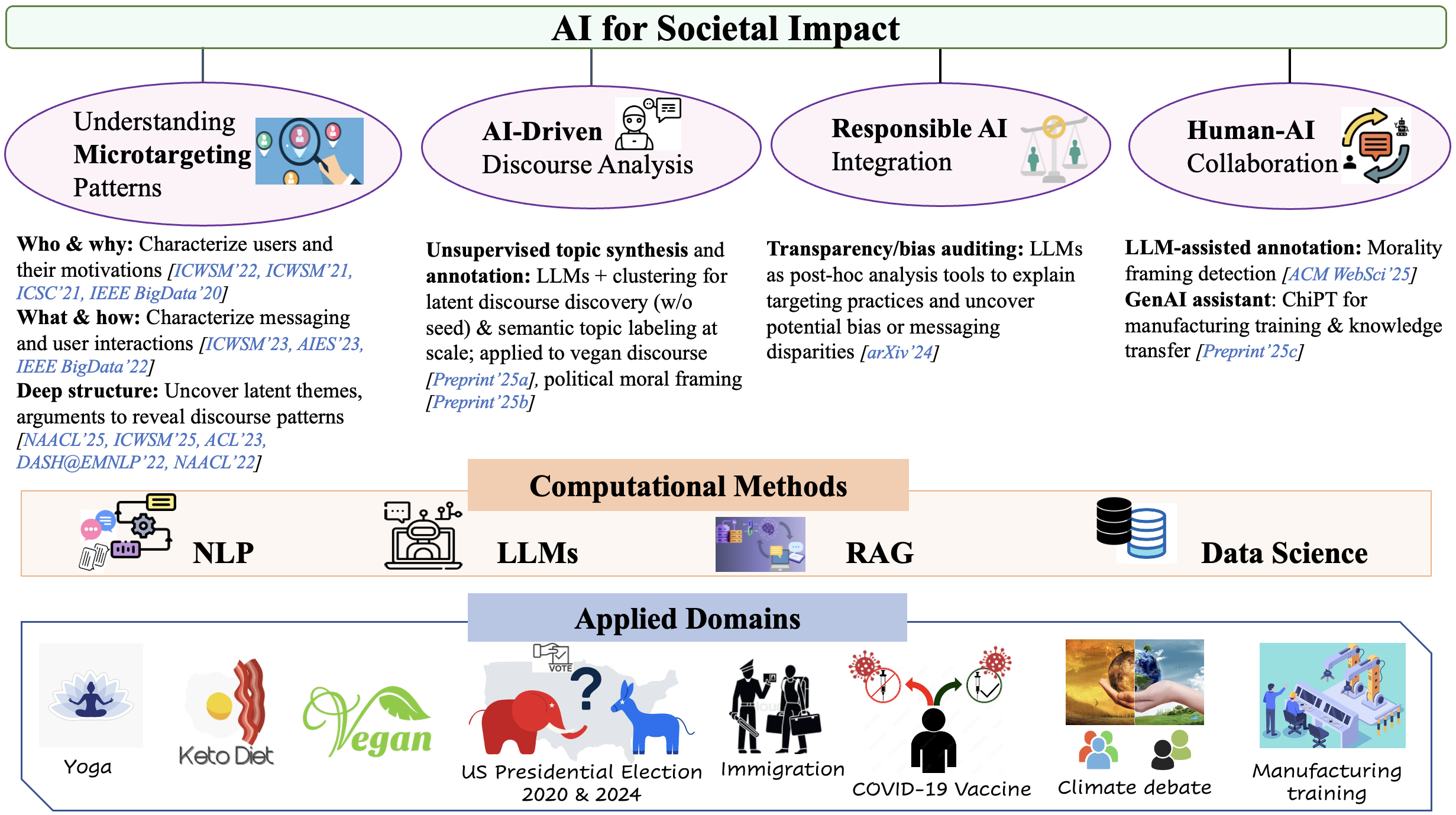

My research interests broadly lie in AI for Societal Impact, at the intersection of Natural Language Processing (NLP) and Computational Social Science (CSS). I design scalable, explainable, collaborative, and socially responsible AI methods to tackle challenges in microtargeting analysis, discourse understanding, responsible AI integration, and human–AI collaboration. I apply these approaches to socially significant domains, including elections, climate debates, vaccine debates, lifestyle choices (yoga, keto, veganism), immigration, manufacturing training, and AI governance. See the research details here.

My research has been published in prominent venues such as AAAI ICWSM, NAACL, EMNLP, AAAI, ACL, AAAI/ACM AIES, ACM WebSci, and IEEE Big Data. See my publication list here.

Recent News

- 🎤 November 11, 2025: Gave an invited talk at the IEEE DISTILL 2025 Workshop on Distributed, Secure, and Trustworthy Intelligence with LLMs on “Understanding Microtargeting Patterns in the Era of LLMs”.

- November 04–09, 2025: Attended EMNLP 2025 and presented our work Post-hoc Study of Climate Microtargeting on Social Media Ads with LLMs: Thematic Insights and Fairness Evaluation.

- October 01, 2025: Area Chair (AC) of ACL Rolling Review (ARR).

- 📝 August 20, 2025: Long paper accepted for publication in Findings of EMNLP 2025.

- August 13, 2025: Tutorial Co-Chair of ICWSM 2026. Please submit your proposal by January 15, 2026. CFP: https://www.icwsm.org/2026/submit.html

- June 23-26, 2025: Attended ICWSM 2025 and presented our work Discovering Latent Themes in Social Media Messaging: A Machine-in-the-Loop Approach Integrating LLMs.

- 🎤 June 12, 2025: Gave an invited talk at the School of Computing Technologies, RMIT University, Melbourne, Australia, on “Understanding and Analyzing Microtargeting Patterns on Social Media”. Thanks to Dr. Jenny Zhang for hosting me!

- May 22, 2025: Senior Program Committee (SPC) of ICWSM 2026.

- May 20-23, 2025: Attended WebSci’25 and presented our work Can LLMs Assist Annotators in Identifying Morality Frames? - Case Study on Vaccination Debate on Social Media.

- April 29-May 04, 2025: Attended NAACL 2025 and presented our work Uncovering Latent Arguments in Social Media Messaging by Employing LLMs-in-the-Loop Strategy. I also organized a BoF session on “Understanding and Analyzing Microtargeting Patterns on Social Media”.

- April 15-16, 2025: Attended Midwest Speech and Language Days (MSLD) 2025 at the University of Notre Dame to present two posters.

- 🏆 April 11, 2025: Received the Graduate Women in Science Program (WISP) award from the College of Science, Purdue University.

- March 26, 2025: Organizer and Chair of a Birds of a Feather (BoF) session at NAACL 2025 on “Understanding and Analyzing Microtargeting Patterns on Social Media”. Please join on May 2 (Friday), 9:00-10:30 MST, Room 230 - Pecos, Albuquerque Convention Center.

- 🏆 March 19, 2025: Received the NAACL 2025 Diversity and Inclusion Award (D&I Award).

- 📰 March 11, 2025: My research and interview were featured in AIhub.

- 🏆 February 26, 2025: My Ph.D. thesis proposal won the best poster award at the 2025 AAAI/SIGAI Doctoral Consortium. [Award] [Announcement] [Photo]

- February 25-March 04, 2025: Attended AAAI 2025 and presented my Ph.D. thesis proposal at the AAAI-25 Doctoral Consortium.

- 🎓 February 07, 2025: Defended my Ph.D. dissertation.

- 📝 January 31, 2025: Long paper accepted for publication in ACM WebSci 2025.

- 📝 January 22, 2025: Long paper accepted for publication in Findings of NAACL 2025.

- 🏆 November 02, 2024: Ph.D. thesis proposal accepted at the AAAI-25 Doctoral Consortium.

- October 29-31, 2024: Attended the 2024 Academic Data Science Alliance (ADSA) Annual Meeting and organized a tutorial on “Analyzing Microtargeting on Social Media”.

- October 04, 2024: Tutorial Co-Chair of ICWSM 2025. Please submit your proposal by January 15, 2025. CFP: https://www.icwsm.org/2025/submit/index.html

- 📝 July 15, 2024: Long paper accepted for publication in ICWSM-2025.

- July 7, 2024: Session proposal accepted at the 2024 Academic Data Science Alliance (ADSA) Annual Meeting.

- June 14-15, 2024: Attended the 2024 Mexican NLP Summer School.

- June 12, 2024: Associate Chair (AC) of CSCW 2025.

- June 9, 2024: Associate Chair (AC) of CSCW 2024.

- May 20-21, 2024: Attended the Midwest Machine Learning Symposium (MMLS) 2024.

- May 09, 2024: Became an Ambassador for ICWSM.

- April 15-16, 2024: Attended Midwest Speech and Language Days (MSLD) 2024 at the University of Michigan, Ann Arbor to give a talk.

- 🏆 March 28, 2024: Received the Graduate School Summer Research Grant Award.

- February 6, 2024: Gave a guest lecture in Natural Language Processing, Purdue University.

- December 13, 2023: Passed the preliminary exam and became a Ph.D. candidate.

- August 08-10, 2023: Attended AIES-2023, Montreal, Canada.

- June 05-08, 2023: Attended ICWSM-2023, Limassol, Cyprus.

- 📝 May 05, 2023: Long paper accepted at AIES-2023 as an oral presentation.

- 📝 May 02, 2023: Long paper accepted to Findings of ACL 2023.

- 🏆 April 14, 2023: Received the Graduate Teaching Award from the Purdue CS department.

- December 17-20, 2022: Attended IEEE BigData 2022, Osaka, Japan (Virtually).

- December 7-11, 2022: Attended EMNLP 2022, Abu Dhabi, UAE (Virtually).

- 📝 October 24, 2022: Regular paper accepted for publication in IEEE BigData 2022.

- 📝 July 16, 2022: Long paper accepted for publication in ICWSM-2023.

- June 06-09, 2022: Attended ICWSM-2022, Atlanta, Georgia.

- 🏆 May 9, 2022: Received an ACM-W scholarship to attend ICWSM’22, a one-time award.

- May 9, 2022: Received an ICWSM 2022 travel grant.

- 🏆 April 11, 2022: Received the Graduate School Summer Research Grant Award.

- 📝 April 7, 2022: Long paper accepted for publication in NAACL 2022.

- 📝 July 15, 2021: Long paper accepted for publication in ICWSM-2022.

- June 08-10, 2021: Attended ICWSM-2021, Atlanta, Georgia (Virtually).

- 🏆 March 30, 2021: Received the Graduate School Summer Research Grant Award.

- 📝 March 15, 2021: Long paper accepted for publication in ICWSM-2021.

- January 27-29, 2021: Attended IEEE ICSC 2021, Irvine, California (Virtually).

- December 10-13, 2020: Attended IEEE BigData 2020, Atlanta, Georgia (Virtually).

- 📝 December 06, 2020: Short paper accepted for publication in ICSC-2021.

- August 31, 2020: Submitted my Ph.D. Plan of Study.

- December 17, 2019: Passed the Ph.D. qualifier.